The Power Challenge in AI Computing

The digital age is defined by the explosive growth of data centers, vast engines of computation that power everything from social media to scientific discovery. At the heart of this revolution, particularly with the rise of Artificial Intelligence (AI) applications, lies a fundamental bottleneck: power. The premise is simple: AI runs on computing, and computing runs on power. As these computational environments grow denser, packing more processing capability into smaller spaces, their demand for energy is soaring. The scale is significant: data center electricity use is projected to double by 2030. Growth at that pace pushes traditional power architectures toward obsolescence and creates an urgent need for a new design that can keep up with AI's seemingly endless appetite for electricity.

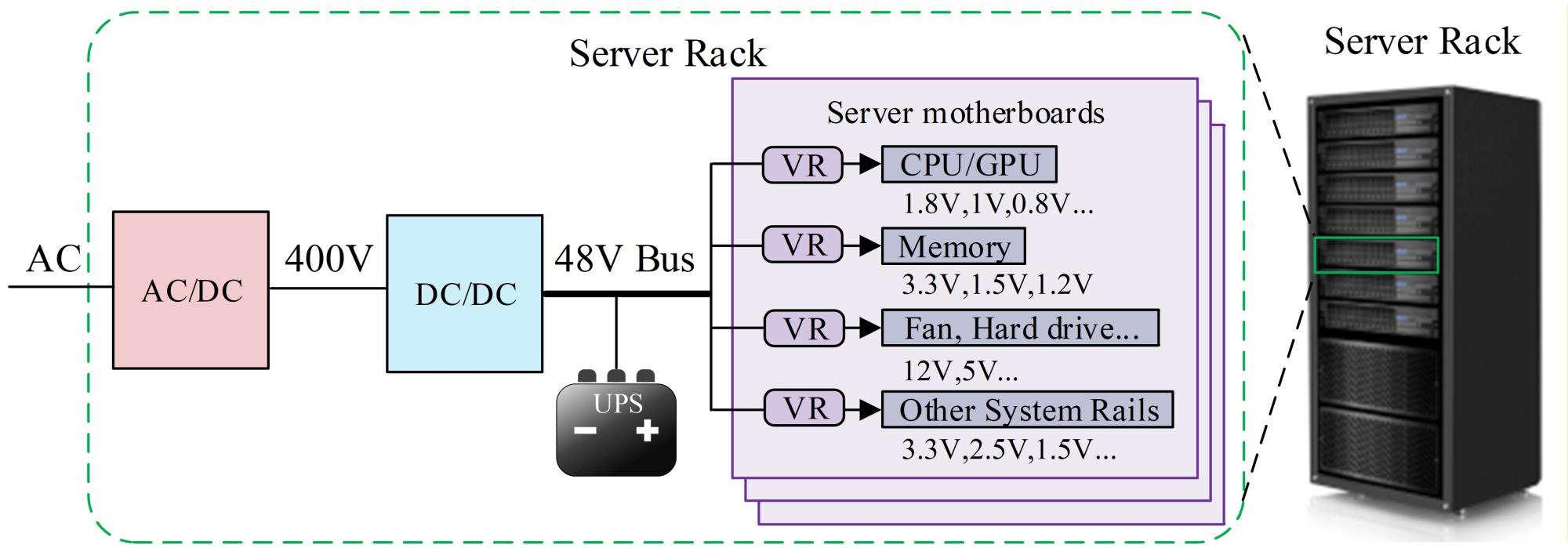

Current systems often involve multiple stages of voltage conversion, and energy is lost at each stage. To address this, researchers focused on creating a more direct and efficient power supply: a high-efficiency 380V-to-48V LLC converter. This step-down is essential for the emerging 48V bus power architecture in data centers, which is designed to minimize overall power loss across the facility.
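
To make the cost of stacked conversion stages concrete, here is a minimal sketch of how per-stage losses compound. It relies on one general fact: the overall efficiency of stages connected in series is the product of the individual stage efficiencies. The efficiency values, the 1 kW load, and the function names are illustrative assumptions, not measurements from the converter described here.

```python
from math import prod


def cascade_efficiency(stage_efficiencies):
    """Overall efficiency of conversion stages connected in series.

    Each stage passes on only a fraction of its input power, so the
    fractions multiply.
    """
    for eta in stage_efficiencies:
        if not 0.0 < eta <= 1.0:
            raise ValueError(f"efficiency must be in (0, 1], got {eta}")
    return prod(stage_efficiencies)


def input_power_w(load_power_w, stage_efficiencies):
    """Power drawn from the source to deliver load_power_w to the load."""
    return load_power_w / cascade_efficiency(stage_efficiencies)


# Hypothetical figures, chosen only for illustration.
multi_stage = [0.96, 0.96, 0.96]  # three cascaded conversion stages
single_stage = [0.98]             # one direct 380V-to-48V stage
load_w = 1000.0                   # power delivered to the 48V bus

loss_multi_w = input_power_w(load_w, multi_stage) - load_w
loss_single_w = input_power_w(load_w, single_stage) - load_w

# Step-down ratio the converter has to provide.
step_down_ratio = 380 / 48

print(f"multi-stage efficiency:  {cascade_efficiency(multi_stage):.3f}")
print(f"multi-stage loss:        {loss_multi_w:.1f} W")
print(f"single-stage loss:       {loss_single_w:.1f} W")
print(f"380V-to-48V ratio:       {step_down_ratio:.2f}:1")
```

Under these assumed numbers, three stages that each look efficient in isolation still waste several times more power than one direct stage, which is the motivation for converting 380V to 48V in a single step.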