No recent issue has roiled education more than artificial intelligence (AI). Especially since the rise of generative AI three years ago, educators have struggled to decide whether and how to allow AI into the classroom, and how it might be taught and used for learning. Even as academics have wrung their hands, however, the business world has had no such compunctions. Companies around the world have eagerly embraced AI, integrating new tools into their workflows to spur innovation and improve efficiency.

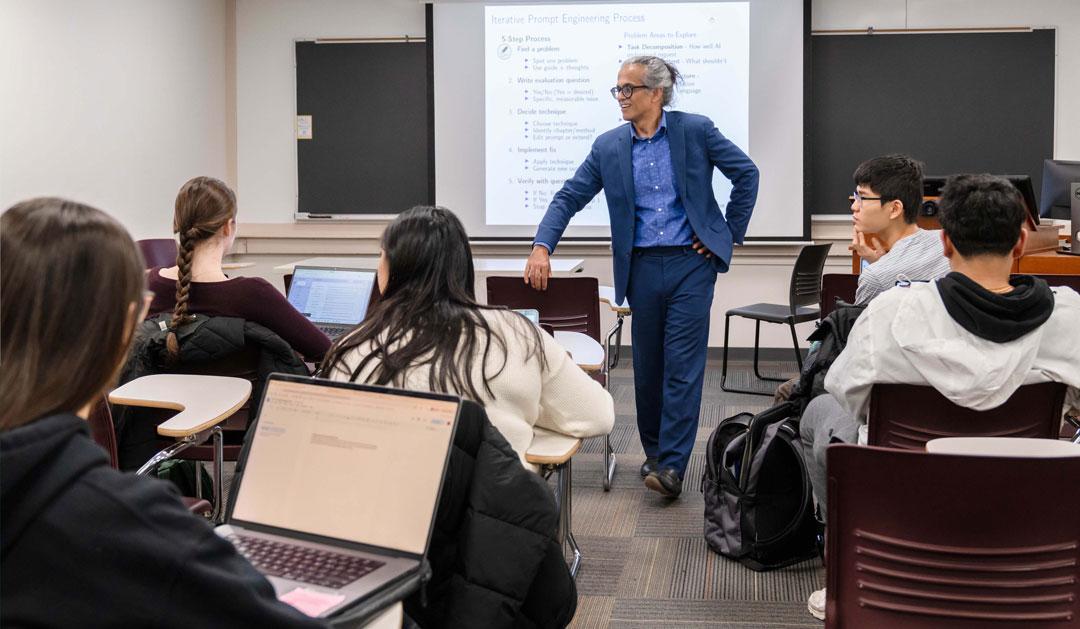

Higher education has reached an inflection point. If universities continue to vacillate on allowing AI into the curriculum, they risk leaving students unprepared for the world they'll soon enter. Recognizing that fact, Lehigh has been at the forefront of embracing AI as a force reshaping not only what students learn, but also how the university teaches. Beginning in early 2023, the university introduced resources and tools to help faculty members integrate AI into their classrooms. In October, it announced a new "AI Readiness" initiative to prepare students for an AI-driven future.

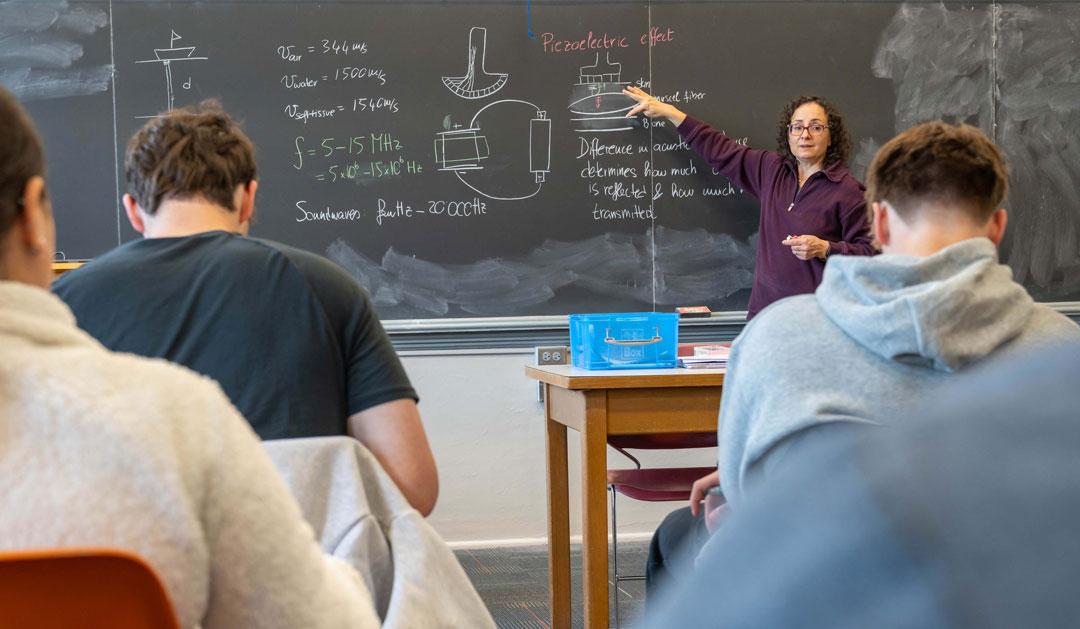

The new initiative takes a campus-wide, multidisciplinary approach to AI, aiming to prepare every student, regardless of major, to use its tools. From faculty experimenting with AI tutors and other classroom supports to AI training that gives students the knowledge and skills they will need in the working world, Lehigh is embedding AI into every facet of academic life and positioning itself as a leader in AI education and research. According to Nathan Urban, provost and senior vice president for academic affairs, the goal is threefold: to teach about AI, to teach with AI and to teach the ethical use of AI.