Will the Real J.S. Bach Please Step Forward?

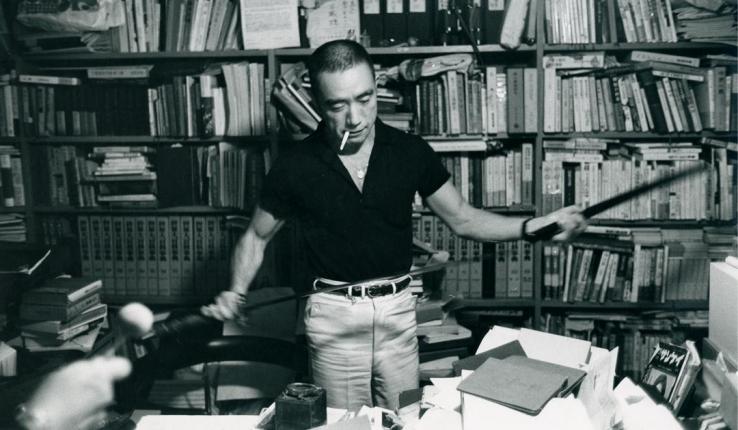

Collins’s algorithm generates a credible likeness of a Bach chorale such as “Schmücke dich, o liebe Seele” (“Deck thyself, my soul, with gladness”) but is less adept with Chopin’s mazurkas. (Photo by Neha Kavan)

Twenty years have passed since Deep Blue defeated Garry Kasparov and became the first computer to win a chess match against a world champion. Since then, the gap between machine and man, for well-defined tasks like chess, has widened with the development of more powerful computer chips and software.

In the area of human creativity, the picture is less clear. Google last year announced Magenta, a project that seeks to teach machines to create “compelling” works of music and art. But some experts caution that creativity is a mystery, almost impossible to define or explain, let alone quantify or replicate.

Tom Collins has a different goal: to use algorithms and computer software to enhance human creativity and enable more people to participate in music.

“Sometimes the type of work we do is described in fear-mongering tones,” says Collins, a visiting assistant professor of psychology, “as if computers are taking over. I don’t see it like that.

“What we’re trying to accomplish is more about what humans can do with computers to enrich their lives and make their own creations.”

Collins and his colleague, Robin Laney of the Open University in England, have developed an algorithm called Racchmaninof-Jun2015, which generates music in specifiable styles. (Racchmaninof stands for RAndom Constrained CHain of MArkovian Nodes with INheritance Of Form, which is why its spelling differs from that of Sergei Rachmaninov, the composer.)

Collins and Laney have programmed Racchmaninof to generate music emulating the style of four-part chorales, or hymns, by Johann Sebastian Bach (1685-1750) and the style of mazurkas, or Polish dances, by Frederic Chopin (1810-49).

The researchers asked human test subjects, who had an average of 8.5 years of music training, to listen to excerpts of the Bach and Chopin pieces and to samples generated by Racchmaninof. The subjects were asked to determine which was which and to rate the excerpts’ stylistic success. The recordings of the pieces were generated by computer on a piano, with no expression or phrasing.

The results, the researchers reported recently in the Journal of Creative Music Systems, were promising. When comparing authentic Bach chorales (such as “Schmücke dich, o liebe Seele”) with computer-generated chorales, the researchers wrote, “only five out of 25 participants performed significantly better than chance at distinguishing Racchmaninof’s output from original human compositions.

“In the context of relatively high levels of musical expertise, this difficulty of distinguishing Racchmaninof’s output from original human compositions underlines the promise of our approach.”

The results with the Chopin pieces were not as encouraging—16 of the 25 participants could tell the difference between Chopin mazurkas (e.g., opus 24, number 1 in G minor) and Racchmaninof samples.

“We’ve done quite well with the Bach hymns,” says Collins, “but less so with the Chopin. It’s unclear to me whether that’s because Chopin’s music is inherently harder to emulate or because the phrasing in the Chopin is more complicated and we don’t have it as well encoded. So it could be that there is the potential to emulate Chopin quite convincingly but we need to improve our encoding of the phrases in that data.”

Collins will discuss his research tomorrow when he gives a presentation titled “Music Cognition: What Computational Models Can Tell Us” at 4:10 p.m. in Room 290 of the STEPS building. The event is part of a series of events titled Music of the Mind: Diversity in Learning, which is sponsored by the departments of music and psychology and the cognitive sciences program. The series is part of the Join the Dialogue program of the College of Arts and Sciences.

From d’Arezzo to Markov

Collins has studied classical piano and played guitar in rock and indie bands. He has two bachelor’s degrees—in music from Robinson College, Cambridge, and in mathematics and statistics from Keble College, Oxford. He holds a Ph.D. in computer science from the Open University.

Human beings, says Collins, have endeavored for centuries to codify the process of creating music.

“Our work has a long history. You can take it back to the Enlightenment, when people would have musical dice games where they rolled the die to concatenate bars of existing music to make a new minuet.

“You could go back even further to someone named Guido d’Arezzo [the Italian musician (990-1050) credited with inventing the Western musical notation system], who wrote what’s agreed to be the first algorithm for composing music.”

In his work, Collins borrows from the Russian mathematician and probability theorist Andrei Andreyevich Markov (1856-1922), whose models, he says, incorporate temporal dependencies.

“Markov’s model says that a state has certain probabilities of transitioning to a set number of other states,” says Collins. “In language, for example, you could model someone’s utterances in a Markov model. If someone says the words ‘what comes up’, the word ‘up’ might go to the word ‘next’ with high probability or to the word ‘tomorrow’ with slightly less probability.

“If you apply this model to music, you can get relatively sensible sequences of notes over the length of one measure. Any longer than that and you start to hear, if you know the style that’s being imitated, that the material is wandering in a way that music by songwriters or composers does not.”
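The idea Collins describes can be sketched in a few lines of Python. This is only an illustration of a first-order Markov chain, not the Racchmaninof code: the three-sentence corpus is invented, and the function names (`build_transitions`, `transition_probabilities`, `generate`) are hypothetical.

```python
import random
from collections import Counter, defaultdict

def build_transitions(sequences):
    """Count how often each state is immediately followed by each other state."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return counts

def transition_probabilities(counts, state):
    """Turn the raw follow-on counts for one state into probabilities."""
    total = sum(counts[state].values())
    return {nxt: n / total for nxt, n in counts[state].items()}

def generate(counts, start, length, rng):
    """Walk the chain from `start`, choosing each next state by its weight."""
    out = [start]
    # Stop early if the current state was never followed by anything.
    while len(out) < length and counts.get(out[-1]):
        options = counts[out[-1]]
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        out.append(nxt)
    return out

# Toy corpus, invented for illustration: "up" is followed by "next"
# twice and by "tomorrow" once.
utterances = [
    "what comes up next".split(),
    "what comes up next".split(),
    "what comes up tomorrow".split(),
]
counts = build_transitions(utterances)
probs = transition_probabilities(counts, "up")
phrase = generate(counts, "what", 4, random.Random(0))
```

Here `probs` gives “next” a probability of two-thirds and “tomorrow” one-third. Because each step looks only at the current state, nothing in the chain remembers where a phrase began, which is consistent with the wandering Collins describes over longer stretches.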

When humans compose music, says Collins, they employ structures and patterns that are identifiable and repetitive, as well as pertinent harmonic and thematic relationships. They begin and end compositions in the same key while also modulating to other keys, they develop and vary themes and motives, and they give phrases a clear beginning and end.

Collins and Laney programmed Racchmaninof to extract the repetitive structure of an existing piece in the target style (called a template), taking care to borrow only that structure and not any specific notes.

“If you’re listening to a piece generated by computer, it should be rare if you’re able to say, ‘This is the structure of a specific piece,’ because the nature of the borrowed structure is very abstract.”

Collins and Laney programmed Racchmaninof by feeding it a template chorale and 50 other chorales that had first been “chopped up” into chord-like events. To generate events in a new chorale, Racchmaninof uses the Markov model to determine how one state (one note or chord) should transition to the next.

“To define state,” says Collins, “we take the pitches relative to the tonic and then ask what beat of the measure a note or chord exists on. If a chord falls on the final beat of a measure and is moving to the next measure, there might be differences in terms of what comes next compared to that same chord falling on the first beat of a measure.”
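One way to picture such a state is as a pair: the beat of the measure, plus the pitches measured in semitones above the tonic. The sketch below is an assumption-laden illustration rather than the researchers’ encoding. It assumes pitches are given as MIDI note numbers, and `encode_state` is a hypothetical name.

```python
def encode_state(midi_pitches, tonic_midi, beat):
    """Return (beat, pitches relative to the tonic) as a hashable state.

    Assumptions for illustration: pitches are MIDI note numbers,
    `tonic_midi` is the MIDI number of the key's tonic, and `beat`
    is the position in the measure on which the chord sounds.
    """
    relative = tuple(sorted(p - tonic_midi for p in midi_pitches))
    return (beat, relative)

# A C major triad in the key of C (tonic = MIDI 60), on beat 1.
c_major_in_c = encode_state([60, 64, 67], tonic_midi=60, beat=1)

# A G major triad in the key of G (tonic = MIDI 67), on beat 1:
# the same state, because pitches are measured from the tonic.
g_major_in_g = encode_state([67, 71, 74], tonic_midi=67, beat=1)

# The same C major triad on beat 4 is a different state, so it can
# have different likely continuations.
c_major_beat_4 = encode_state([60, 64, 67], tonic_midi=60, beat=4)
```

Measuring from the tonic lets pieces in different keys contribute to the same transition counts, while keeping the beat in the state preserves the distinction Collins draws between a chord at the end of a measure and the same chord at the start.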

Beyond beguiling trained musicians into mistaking computer-generated music for a work by Bach, Collins sees useful applications for his research.

“It’s challenging to generate short pieces of music but it has no huge use beyond saying you can do it. So we’ve tried to develop more iterative and interactive versions of this work to provide an interface to people who are composing music. The goal is to help people learn how to compose in different styles, follow in the footsteps of different composers, or simply press a button for an interesting suggestion when they get stuck and don’t know where to go next.”

Another goal, says Collins, is to enable more people to participate in music.

“I got along in music mainly by having individual, one-on-one instruction. That’s obviously fairly expensive so one of the questions I have is, ‘Can we produce technology that helps people study music who wouldn’t otherwise have the money to pay for one-on-one instruction?’

“Research shows that engagement in traditional music activities improves or at least offsets deficiencies in children’s numeracy and literacy skills. It might be taking this too far to say that our work can help but maybe that’s something we’ll be able to show eventually.”

Will algorithms ever become sophisticated enough to write Beethoven’s 10th Symphony or Mozart’s 42nd?

“I do envisage such a day,” says Collins, “but it is perhaps more difficult to envisage a day when such a work is also critically acclaimed.”

The Music of the Mind series continues on Thursday, April 20, when Andrea R. Halpern, professor of psychology at Bucknell University, will give a talk titled “Music Cognition in Healthy Aging and Neurodegenerative Disease.”

The series will culminate with two concerts, on Friday and Saturday, May 5 and 6, by Lehigh University Choral Arts, which will be joined by Joyful Noise, a special needs choir based in New Jersey. The concerts will begin at 7:30 p.m. on May 5 and 4 p.m. on May 6 under the direction of Steven Sametz, the artistic director of Choral Arts and the Ronald J. Ulrich Professor of Music at Lehigh.

Story by Kurt Pfitzer
