What's Your Data Privacy Style?

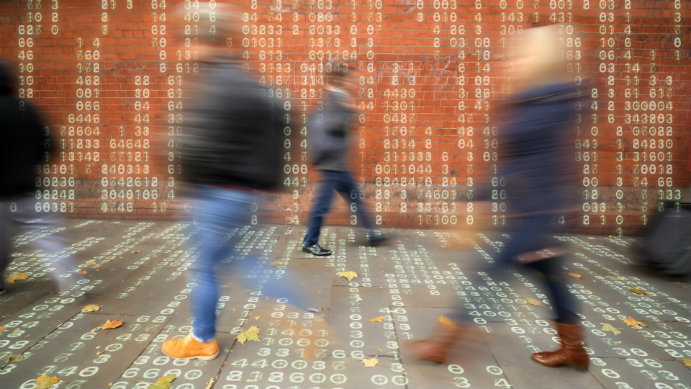

Professor Eric P. S. Baumer’s new NSF-funded study will examine data privacy styles with an eye toward understanding how to design human-centered technology to support various user approaches to data privacy. Image credit: iStock/peterhowell

Internet search engines are a treasure trove of user data. Researchers have estimated that more than 4 million search queries are entered into Google every minute.

Computer algorithms that infer a person’s gender, age, political opinions, religious affiliations and other traits from user data are in widespread use, and this practice has raised serious privacy concerns.

How are individual internet users approaching personal privacy protection in this algorithm-rich environment?

“Such behaviors are poorly accounted for in existing technology design practices,” says Eric P. S. Baumer, assistant professor of computer science and engineering.

Baumer was recently awarded a grant by the National Science Foundation through its Division of Computer and Network Systems to study how people navigate a world in which data collection is a continuous feature of their environment and how internet systems can be better designed to support “data literate” behaviors. The award is a collaborative grant with Andrea Forte, associate professor of information science at Drexel University.

The study seeks to fill a gap in knowledge about the various ways individuals approach personal privacy protection. The goal is to better understand human-computer interaction in order to inform technology designs that accommodate and support different privacy styles.

According to Baumer, more than 3.5 billion people worldwide are estimated to have internet access, and most of them have access to search engines.

The pervasiveness of such analytic systems, he says, makes it highly impractical if not impossible to be a non-user of “big data,” either generally or of specific technologies like search.

“Rather than complete non-use, those who object to practices of big data, such as analysis of search histories, instead employ various types of avoidance practices,” says Baumer. “Research has shown that these practices are driven not by ignorance or fear but by varying types of literacies about data collection and analysis.”

Some approaches, he says, involve selective or limited opting out. Others focus on concealing information, for example by encrypting web traffic. Still others rely on obfuscating personal data.

The study will be conducted in three phases. In the first phase, Baumer and his team will use a series of surveys to develop instruments for assessing respondents’ “privacy styles.” This will include determining demographic differences between algorithm avoiders and other internet users, as well as identifying various types of avoiders and what distinguishes them from one another.

“Rather than assessing privacy ‘literacy’, which is often framed either as high or low, our approach will identify different sets of strategies that people use to negotiate privacy concerns,” says Baumer.

The second phase will consist of qualitative fieldwork with users of tracking-avoidance technologies to determine what algorithm avoidance looks like in practice. The project team will seek to identify the different strategies avoiders employ, as well as their perspectives on how effective these practices are.

Finally, Baumer will create a collection of experimental prototype tools to explore the design space around algorithmic privacy. The goal is to answer the question: How can designers create systems that reflect and support users’ various data privacy approaches?

Ultimately, Baumer hopes the proposed privacy styles instruments could be useful to a variety of disciplines, including human-computer interaction, communication, sociology and media studies.

“Collectively, this work will expand our technology design vocabulary in terms of being able to account for different styles of privacy practices,” says Baumer. “It also has the potential to significantly improve privacy policies and technologies by describing the specific challenges that people face as they attempt to protect their privacy.”
