“In robot manipulation, learning is a promising alternative to traditional engineering methods and has demonstrated great success, especially in pick-and-place tasks,” says Cui, whose work focuses on the intersection of robot manipulation and machine learning. “Although many research questions still need to be answered, learned robot manipulation could potentially bring robot manipulators into our homes and businesses. Maybe we will see robots mopping our tables or organizing closets in the near future.”

In a review article in Science Robotics called “Toward next-generation learned robot manipulation,” Cui and Trinkle summarize, compare and contrast research in learned robot manipulation through the lens of adaptability and outline promising research directions for the future.

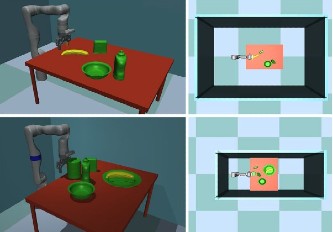

Cui and Trinkle emphasize the usefulness of modularity in learning design and point to the need for appropriate representations for manipulation tasks. They also note that modularity enables customization.

Cui says that those in traditional engineering may doubt the reliability of learned skills for robot manipulation because they are usually ‘black-box’ solutions, which means that researchers may not know when and why a learned skill fails.

“As our paper points out, appropriate modularization of learned manipulation skills may open up ‘black-boxes’ and make them more explainable,” says Cui.

Cui and Trinkle propose nine areas as particularly promising for advancing the capacity and adaptability of learned robot manipulation:

1) Representation learning with more sensing modalities, such as tactile, auditory, and temperature signals.
2) Advanced simulators for manipulation that are as fast and as realistic as possible.
3) Task/skill customization.
4) “Portable” task representations.
5) Informed exploration for manipulation, in which active learning methods can find new skills efficiently by exploiting contact information.
6) Continual exploration, or a way for a learned skill to improve continually after robot deployment.
7) Massively distributed/parallel active learning.
8) Hardware innovations that simplify more challenging manipulations, such as in-hand dexterous manipulation.
9) Real-time performance, since learned manipulation skills will eventually be tested in the real world.

Following some of these directions, Cui and Trinkle are currently working on tactile-based sensorimotor skills to make robot manipulators more dexterous and robust.

For Cui, one of the most exciting discoveries he made while surveying the current research is that learned robot manipulation is still in its infancy.

“That leaves many opportunities for the research community to explore and thrive on,” says Cui. “The promising future and the vast space for exploration will make learned robot manipulation an exciting area of research for decades to come.”

To learn more about their work, read: Advancing Robotic Grasping, Dexterous Manipulation & Soft Robotics.