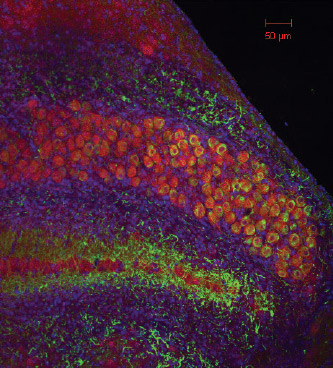

The brain breaks down complex sound waves into their component frequencies and maps them in an orderly spatial arrangement called tonotopy. This tonotopic organization is repeated wherever sound is processed in the brain. Hair cells at one end of the cochlea detect low-frequency sounds, and those at the other end detect high-frequency sounds. The brain eventually interprets these frequencies as pitch.

But how do the brain cells know what type of frequency to detect? Neuroscientist R. Michael Burger and his team are working to find out.

“We know that tonotopy exists in the brain and that it’s repeated in every brain structure, but the question is, does a high-frequency neuron in the brain know that it’s a high-frequency neuron because of where it resides in the brain—which is one possibility—or does the ear tell it to become a high-frequency cell because of the type of input it’s getting?” asks Burger.

An understanding of this process could help guide interventions for individuals with hearing loss.

Different Neurons, Different Behaviors

Neurons deliver information about sound to the cochlear nucleus (the first synapse in the brain), and the cells in the cochlear nucleus begin to compute where sound is coming from by precisely encoding the arrival time of sound at the two ears. The difference in arrival time is very small: at most, less than a millisecond from one ear to the other, says Burger. “So the parts of the brain that process that information and compute that information have to represent time very, very precisely.”

In publications in The Journal of Neuroscience in 2014 and 2016, Burger and his colleagues identified the importance of timing precision to the neurons in the cochlear nucleus. These neurons fire randomly in the absence of sound, but when a sound is detected, they fire in an organized manner at a particular phase of a periodic stimulus, such as a sound wave. This is called phase-locking.

“[The neurons] basically lock themselves to a particular phase of the signal,” explains Burger.

“And if you present that tone over and over again, you get the same output time and again: The neuron fires the same way every time you play that sound, and it does so with such little error that the cells that are comparing the two sounds from the two ears are trying to match those phase-lock discharges with each other from each ear.”
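Phase-locking of this kind is commonly quantified with a measure called vector strength, which is 1 when every spike falls at the same phase of the stimulus and close to 0 when spikes show no phase preference. The following is a minimal sketch using simulated spike times, not the team's data or analysis code. The tone frequency, the 0.1 ms jitter, and the spike counts are all hypothetical values chosen for illustration.

```python
import math
import random


def vector_strength(spike_times_s, frequency_hz):
    """Return 1.0 if every spike shares one phase, near 0 if phases are random."""
    if not spike_times_s:
        raise ValueError("need at least one spike time")
    # Map each spike time to its phase angle within the stimulus cycle.
    phases = [2.0 * math.pi * frequency_hz * t for t in spike_times_s]
    cos_sum = sum(math.cos(p) for p in phases)
    sin_sum = sum(math.sin(p) for p in phases)
    # Length of the mean resultant vector of the phase angles.
    return math.hypot(cos_sum, sin_sum) / len(spike_times_s)


rng = random.Random(0)   # fixed seed so the sketch is repeatable
TONE_HZ = 500.0          # hypothetical tone frequency
PERIOD_S = 1.0 / TONE_HZ
JITTER_SD_S = 0.0001     # hypothetical 0.1 ms timing jitter
N_SPIKES = 1000          # hypothetical number of spikes

# "Sound on": one spike per cycle, about a quarter of the way through each cycle.
driven = [(k + 0.25) * PERIOD_S + rng.gauss(0.0, JITTER_SD_S)
          for k in range(N_SPIKES)]
# "Silence": spikes scattered uniformly over the same time window.
spontaneous = [rng.uniform(0.0, N_SPIKES * PERIOD_S) for _ in range(N_SPIKES)]

vs_driven = vector_strength(driven, TONE_HZ)
vs_spontaneous = vector_strength(spontaneous, TONE_HZ)
print(f"driven: {vs_driven:.2f}  spontaneous: {vs_spontaneous:.2f}")
```

In this sketch the simulated sound-driven spikes give a vector strength close to 1, while the randomly timed spikes give a value close to 0.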

Low-frequency sound waves have longer periods, which makes it easier for a neuron to phase-lock. High-frequency sound waves have shorter periods, so each cycle passes more quickly and gives neurons less time to react. “The period is maybe half a millisecond or less, that is, half of a thousandth of a second or less. The neuron just has to instantly decide if it’s going to fire or not,” says Burger.
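As a quick check on the numbers in the quote, the period $T$ of a tone is the reciprocal of its frequency $f$, so a period of half a millisecond corresponds to a 2 kHz tone:

$$
T = \frac{1}{f}
\quad\Longrightarrow\quad
f = \frac{1}{T} = \frac{1}{0.5 \times 10^{-3}\ \text{s}} = 2000\ \text{Hz}.
$$

A period of "half a millisecond or less" therefore describes tones of roughly 2 kHz and above.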

“So you could think of this tonotopic arrangement as a computational difficulty gradient. It’s easy for [low-frequency] cells to do their job, and it’s hard for [high-frequency] cells. So we found properties of these cells and the inputs to them that help the high-frequency cells be better at their job.”
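One way to picture this difficulty gradient is to hold a neuron's timing error fixed and raise the tone frequency. The same error then covers a larger share of each cycle. The sketch below assumes Gaussian timing jitter with a hypothetical standard deviation of 0.1 ms. Neither assumption comes from the article. It scores phase-locking with vector strength, a standard measure that is 1 for perfect locking and 0 for none. For Gaussian jitter, the expected vector strength is exp(-(2πfσ)²/2), where f is the frequency and σ is the jitter.

```python
import math


def expected_vector_strength(frequency_hz, jitter_sd_s):
    """Expected vector strength for spikes with Gaussian timing jitter."""
    # Convert the timing jitter into jitter in phase (radians).
    phase_sd = 2.0 * math.pi * frequency_hz * jitter_sd_s
    return math.exp(-0.5 * phase_sd ** 2)


JITTER_SD_S = 0.0001  # hypothetical 0.1 ms timing jitter, same at every frequency

for frequency_hz in (250, 500, 1000, 2000, 4000):
    period_ms = 1000.0 / frequency_hz
    vs = expected_vector_strength(frequency_hz, JITTER_SD_S)
    print(f"{frequency_hz:>5} Hz  period {period_ms:.2f} ms  vector strength {vs:.3f}")
```

With identical jitter, the computed vector strength falls as frequency rises, which is one reason a high-frequency cell would need extra help to keep its timing precise.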

Burger and his team are finding answers with the help of chickens.

The Role of Bmp-7

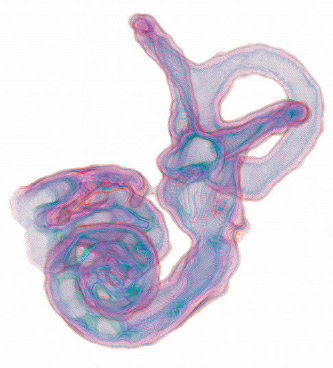

Although the fundamentals of tuning in a chicken’s ear differ slightly from those of mammals, the role of hair cells in tuning in the chicken gives the team an experimental advantage. Chickens use tonotopic organization and carry it through all of their auditory structures, just as mammals do. However, the cochleae of chickens are straight rather than snail-shaped, making it easier to observe the gradient of hair cell properties.

“In the birds, the hair cells are electrically tuned to resonate different frequencies the same way you turn the dial of an old-fashioned radio and change the electrical tuning of the capacitors in the radio to find your favorite station,” says Burger.

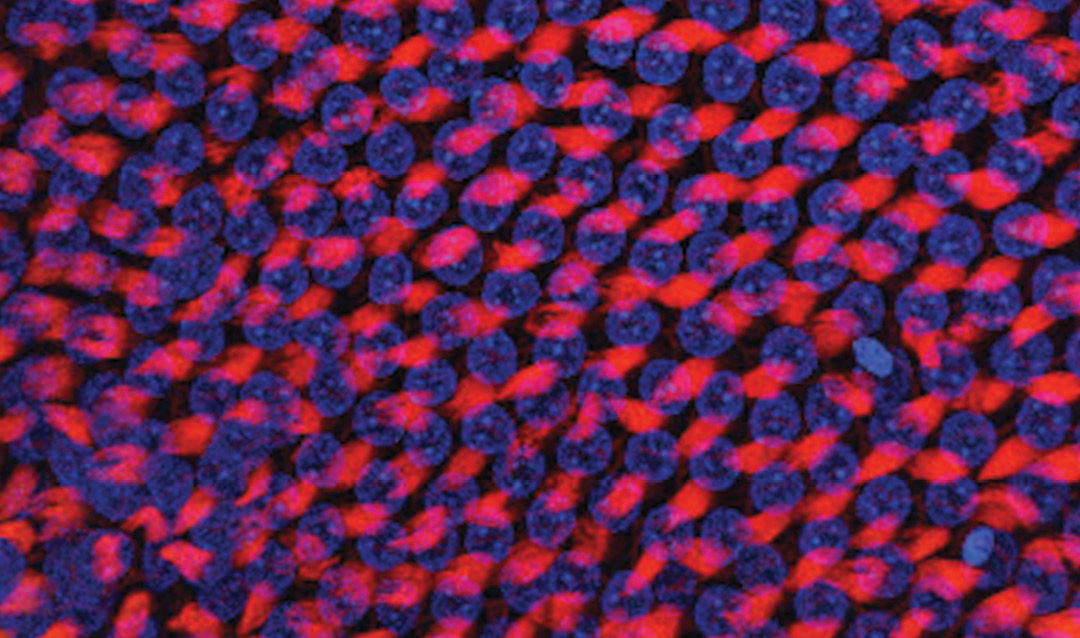

Doctoral student Lashaka Jones observes the difference between low- and high-frequency brain cells by attaching electrodes to neurons in chick brain slices and controlling the electrical environment of the cell. Because the neurons are arranged tonotopically, Jones knows in advance which type of brain cell she is examining. She injects them with ramps of excitatory current and observes their responses, which are markedly different.

Burger explains: “A high-frequency cell needs a very rapid excitation to get it to fire. If the excitation is too slow, it will fail. So, basically, the neuron is setting a threshold that says, ‘If my input is not fast enough, I’m not going to fire.’”

The high-frequency cell, Burger says, does not want to fire in response to poor information and miss good information elsewhere. The low-frequency cell, in contrast, “is much more tolerant of slow excitation before it will fire. … These properties, the speed tolerance of excitation between these types of neurons, is extremely statistically robust.”

Matthew Kelley, a collaborator of Burger’s at the National Institutes of Health, inspired the next steps of the team’s research. Kelley was studying the development of tonotopy in the cochlea and discovered that bone morphogenetic protein 7 (Bmp-7), a protein involved in developmental processes, plays a key role.

“If a developing hair cell gets a high dose of Bmp-7, it will think it’s a low-frequency cell and it will grow up to be a low-frequency cell,” explains Burger. Another protein, chordin, does the opposite: it directs the growth of high-frequency cells. By giving a developing ear too much of either protein, researchers can cause all cells in a particular location to develop as either high- or low-frequency cells.

Supported by a Lehigh Faculty Innovation Grant (FIG), Jones trained at the National Institutes of Health to learn how to insert a plasmid, a small circular piece of DNA, into the developing chick embryo’s ear to manipulate the expression of Bmp-7. The technique is difficult, and it has helped to advance the team’s research.

Jones cuts a tiny hole in an egg with a 2-day-old chicken embryo inside. She then injects the plasmid into a ball of cells—one of the chick’s developing ears—and closes the egg back up. The chick will continue growing normally with one exception: It will have one normal ear and one ear with only low-frequency hair cells.

“Our manipulation is working,” says Jones. “We are creating a chicken that essentially is only going to be hearing low-frequency sounds in one ear.”

Similarly, if Jones did the same experiment using chordin, she says, she could create an all-high-frequency ear.

This gets to the heart of the team’s central question: Is a high-frequency neuron in the brain determined by its location in the brain or by the type of input it’s receiving?

The answer, it seems, is the latter.

“[Jones] can give what would be a high-frequency cell a low-frequency input. And what she’s seeing is that indeed they’re shifting in the low-frequency direction. So the ear does appear to have an instructive role in setting up the tonotopy in the brain,” Burger says.